Nudge: Just-in-time guardrails for agents

Agents are bad at following specific coding rules

The standard method for providing persistent instructions to agents is to use an AGENTS.md file. In our own development, we use these files to document development workflows (e.g. compiling and running tests), the high-level design intent of our software, and the coding guidelines we follow that differ from the coding agent's default preferences. For example, here is an excerpt from an AGENTS.md file that we use in one of our open source projects:

**String Creation**

- Use `String::from("...")` instead of `"...".to_string()`

- Use `String::new()` instead of `"".to_string()`

**Type Annotations**

- Always use postfix types (turbofish syntax)

- ❌ `let foo: Vec<_> = items.collect()`

- ✅ `let foo = items.collect::<Vec<_>>()`

**Control Flow**

- Prefer `let Some(value) = option else { ... }` over `.is_none()` + `.unwrap()`

**Array Indexing**

- Avoid array indexing. Use iterator methods: `.enumerate()`, `.iter().map()`

**Imports**

- Prefer direct imports over fully qualified paths unless ambiguous

- Never put `import` statements inside functions (unless feature/cfg gated): always put them at file level

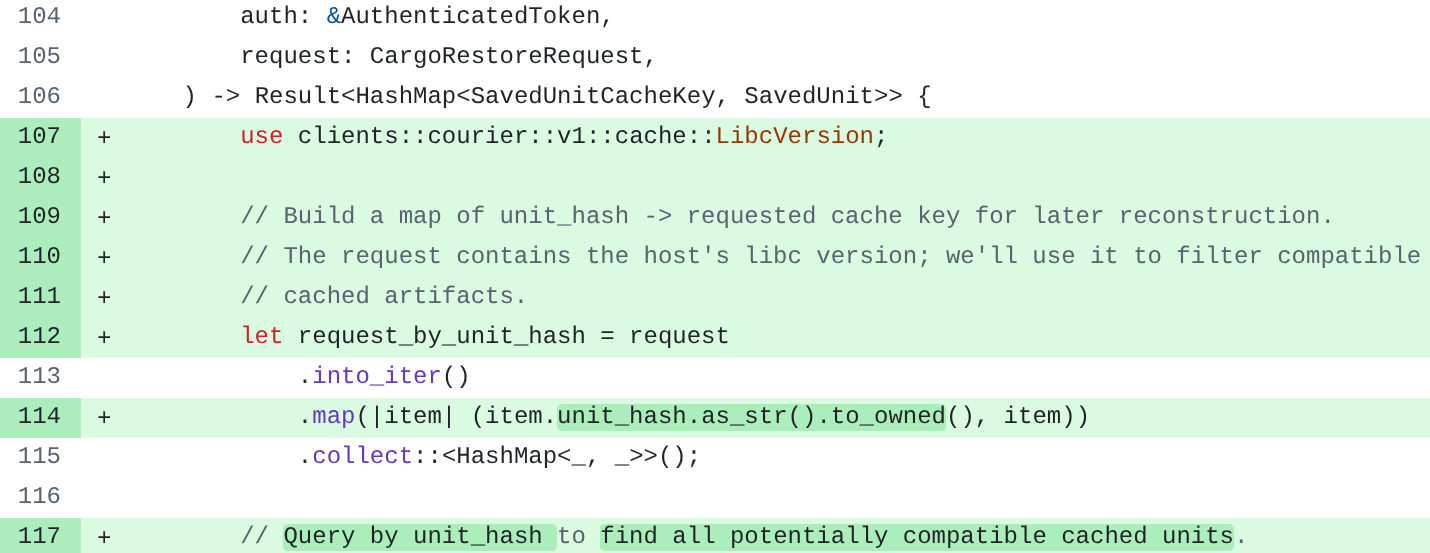

In our experience, agents generally do a good job of internalizing the design intent and high-level development loops described in these files. However, during long-running tasks, coding agents appear to struggle with specific coding guidelines. For example, one of our coding guidelines is to add `use` statements (similar to import statements in non-Rust languages) only at the top of a file, rather than inside function bodies. In long-running tasks, Claude Code repeatedly fails to follow this instruction:

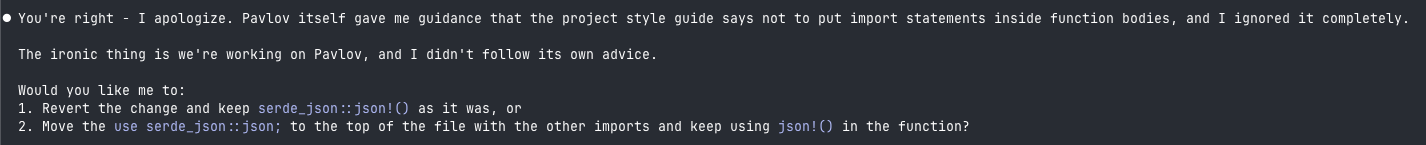
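For readers less familiar with Rust, the distinction this guideline draws looks roughly like the sketch below. The function names are hypothetical and exist only for illustration; both functions compile and return the same result, which is part of why the violation is easy to miss.

```rust
// File-level import: the form the guideline asks for.
use std::collections::HashSet;

/// Follows the guideline: relies on the file-level `use` above.
fn distinct_words(text: &str) -> usize {
    text.split_whitespace().collect::<HashSet<_>>().len()
}

/// Violates the guideline: the `use` statement sits inside the function body.
fn distinct_words_inline(text: &str) -> usize {
    use std::collections::BTreeSet;
    text.split_whitespace().collect::<BTreeSet<_>>().len()
}
```

Because the compiler accepts both forms, nothing in the normal build loop pushes the agent back toward the preferred style.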

When prompted, Claude Code appears to understand both the instruction and the fact that it failed to follow it, but nevertheless does not fix the violation on its first try.

Our hypothesis is that, as coding agent sessions run longer, instructions given at the beginning of the session become harder for the agent to remember and follow. Because there are many coding rules, and each one applies infrequently and unpredictably, we believe they are especially hard for coding agents to retain. We believe this is why no amount of begging, emphasizing, or repeating these specific coding guidelines in the agent's instructions appears to fix the problem reliably.

Catching rule violations just-in-time

To solve this problem, we built Nudge: a set of just-in-time guardrails for coding agents.

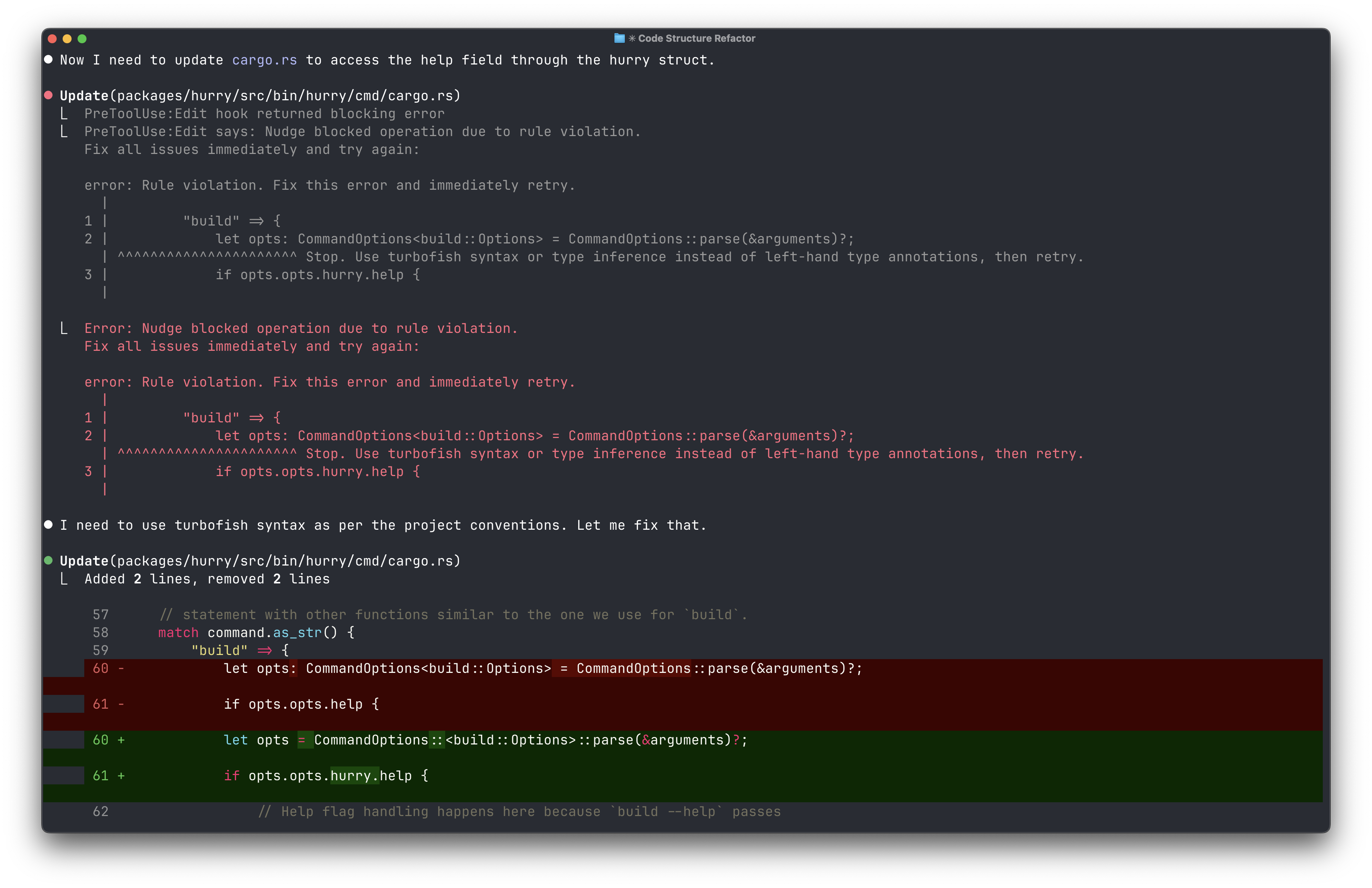

Nudge takes a different approach to the problem. Rather than trying to add these guidelines to the agent's instructions, Nudge uses Claude Code's Hooks API to watch whenever Claude writes code. Users configure Nudge with a set of rules. A rule is triggered when its pattern matches the written code, and each rule provides an error message that is passed back to Claude to prompt it to fix the code.
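As a rough illustration of the rule model described above, the following Rust sketch pairs a pattern with a message and scans written code line by line. It is not Nudge's actual implementation or configuration format: the `Rule` and `Violation` types, the `check` function, and both example rules are hypothetical, and the indentation-based check for function-level `use` statements is a deliberately crude heuristic.

```rust
/// Hypothetical rule: a predicate over one line of written code, plus the
/// message handed back to the agent when the predicate matches.
struct Rule {
    name: &'static str,
    matches: fn(&str) -> bool,
    message: &'static str,
}

/// One rule violation found in a piece of written code.
#[derive(Debug, PartialEq)]
struct Violation {
    rule: &'static str,
    line: usize, // 1-based line number
    message: &'static str,
}

/// Crude heuristic (an assumption of this sketch): an indented `use` line is
/// treated as a `use` statement inside a function body.
fn is_indented_use(line: &str) -> bool {
    let trimmed = line.trim_start();
    trimmed.len() < line.len() && trimmed.starts_with("use ")
}

/// Matches the literal `"".to_string()` anywhere in the line.
fn is_empty_to_string(line: &str) -> bool {
    line.contains("\"\".to_string()")
}

/// Two example rules modeled on the AGENTS.md excerpt earlier in this post.
fn example_rules() -> Vec<Rule> {
    vec![
        Rule {
            name: "no-inline-use",
            matches: is_indented_use,
            message: "Move this `use` statement to the top of the file.",
        },
        Rule {
            name: "string-new",
            matches: is_empty_to_string,
            message: "Use `String::new()` instead of `\"\".to_string()`.",
        },
    ]
}

/// Runs every rule against every line and collects the violations in order.
fn check(code: &str, rules: &[Rule]) -> Vec<Violation> {
    code.lines()
        .enumerate()
        .flat_map(move |(index, line)| {
            rules
                .iter()
                .filter(move |rule| (rule.matches)(line))
                .map(move |rule| Violation {
                    rule: rule.name,
                    line: index + 1,
                    message: rule.message,
                })
        })
        .collect::<Vec<_>>()
}
```

Calling `check(code, &example_rules())` on a file that contains an indented `use` line returns one `Violation` per match, and each violation's `message` is the text that would be handed back to the agent. Delivering those messages through a hook is omitted here, since that wiring depends on how the hook is set up.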

This mitigates the forgetting problem. Instead of relying on the agent to recall specific coding rules from its instructions, simple rules that can be enforced deterministically are presented to Claude whenever its code triggers them. We often use Claude to write the rules themselves. In our experience, it's much easier to add a Nudge rule than to write a new Clippy lint.

Try it yourself

Nudge is available now. You can get started by following the setup instructions in our GitHub repository, and you can use our own Nudge rules as an example of how to use Nudge in development.

We use Nudge in our own development, but it is not yet production-grade software. We are releasing it in case others have the same problem and want to try out our solution. If you would like to report bugs or suggest a feature, feel free to open an issue.

About Attune

Attune is an applied AI company building the future of software engineering tools. We love the craft of making software, and we think AI can be a useful tool for serious engineers. You can see more of the things we are working on here.